Data Cataloging and Data Visualization: Two Imperatives in Today’s Organizations

This is an AArete Financial Services insight

With the proliferation of data, cataloging is becoming a necessity for every big company. Once that system is in place, using data visualization techniques that tell a story can bring great benefits. AArete’s Priya Iragavarapu discusses modern data cataloging systems and three factors in designing good data visualization.

Data Cataloging: No Longer Just a “Nice to Have”

As the volume and variety of data grow exponentially, so does the importance of data catalogs and data visualization. The unchecked growth of data with evolving attributes poses a significant challenge: it makes metadata increasingly difficult to manage.

Enterprise data management is particularly affected by this data glut. With complicated nested data attributes, it’s difficult for stakeholders to take a snapshot of data, explore the metadata, build a data catalog or business glossary, and then reuse it as a reference in perpetuity.

Therefore, data cataloging is not only imperative; it must also happen in real time, with crawlers continuously scanning datasets to identify metadata. These data catalogs perform two tasks: they document the metadata accurately and effectively, and they flag any anomalous metadata as a discrepancy.
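The second task, flagging anomalous metadata, can be illustrated with a minimal sketch that compares two metadata snapshots of the same dataset. The function name `diff_metadata`, the snapshot layout (attribute name mapped to type name), and the sample attributes are assumptions made for illustration; they do not come from any particular cataloging product.

```python
from typing import Dict, List

# Assumed layout: a snapshot maps each attribute (column) name to its observed type.
Snapshot = Dict[str, str]


def diff_metadata(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return human-readable flags for differences between two snapshots."""
    flags = []
    # Attributes that existed before but are missing now.
    for name in sorted(previous.keys() - current.keys()):
        flags.append(f"removed attribute: {name}")
    # Attributes that appear for the first time.
    for name in sorted(current.keys() - previous.keys()):
        flags.append(f"new attribute: {name} ({current[name]})")
    # Attributes present in both snapshots whose type has changed.
    for name in sorted(previous.keys() & current.keys()):
        if previous[name] != current[name]:
            flags.append(
                f"type changed: {name} {previous[name]} -> {current[name]}"
            )
    return flags


# Hypothetical snapshots taken on two consecutive crawls.
yesterday = {"customer_id": "int", "signup_date": "date", "region": "str"}
today = {"customer_id": "str", "signup_date": "date", "segment": "str"}

for flag in diff_metadata(yesterday, today):
    print(flag)
```

A real catalog would likely track more than names and types (for example, value distributions or null rates), but the same compare-and-flag pattern applies.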

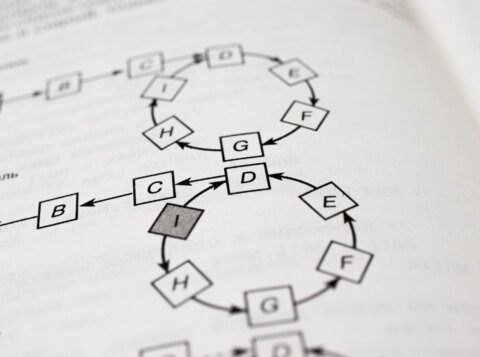

Another reason for the increased need for data cataloging is the prevalence of hybrid, cross-collaborating teams with dotted-line connections within matrix organizations. Every team across the entire data lifecycle needs to understand data beyond its immediate scope of expertise to perform its function effectively. When data cataloging operates this way, it lets teams track data lineage and understand how the data catalog evolves with each step in the data pipeline.

Organizations should seek data cataloging solutions with the following key features. First, the data cataloging solution must be able to crawl data automatically and dynamically sense the data attributes, data types, and data profiles. Additionally, many industry-leading solutions incorporate user input to create a data dictionary or business glossary. Desirable data cataloging programs are also able to translate statistics into user-friendly visuals. Lastly, a robust data catalog solution should not merely show metadata but allow users to take actions from that insight.

However, there are tradeoffs when comparing newer, augmented data catalog capabilities to more traditional approaches. The traditional approach is to build a custom script that crawls the data and writes the resulting metadata to a table for further analysis.
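As a rough sketch of this traditional approach, the script below crawls the tables in a SQLite database and writes per-column metadata into a `catalog` table. SQLite, the `catalog` table layout, the `crawl_metadata` function, and the sample `orders` table are all assumptions chosen to keep the example self-contained; a production crawler would target the organization's actual data stores.

```python
import sqlite3


def crawl_metadata(conn: sqlite3.Connection) -> None:
    """Scan every user table and record per-column metadata in `catalog`."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS catalog ("
        "table_name TEXT, column_name TEXT, declared_type TEXT, "
        "row_count INTEGER, null_count INTEGER)"
    )
    # Batch style: the catalog is rebuilt from scratch on every run.
    conn.execute("DELETE FROM catalog")
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name != 'catalog' "
            "AND name NOT LIKE 'sqlite_%'"
        ).fetchall()
    ]
    for table in tables:
        row_count = conn.execute(
            f'SELECT COUNT(*) FROM "{table}"'
        ).fetchone()[0]
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        for _cid, column, declared_type, *_rest in columns:
            null_count = conn.execute(
                f'SELECT COUNT(*) FROM "{table}" WHERE "{column}" IS NULL'
            ).fetchone()[0]
            conn.execute(
                "INSERT INTO catalog VALUES (?, ?, ?, ?, ?)",
                (table, column, declared_type, row_count, null_count),
            )
    conn.commit()


# Hypothetical source data used only to exercise the crawler.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL, coupon TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 9.5, None), (2, 20.0, "SPRING"), (3, 5.25, None)],
)
crawl_metadata(conn)

for row in conn.execute("SELECT * FROM catalog ORDER BY column_name"):
    print(row)
```

Because the script only sees the data at the moment it runs, someone still has to decide when and how often to schedule it.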

Keeping track of when and how often to run such a script is also a rather manual process, and the script has the disadvantage of batch-style processing. More sophisticated custom solutions consist of real-time streaming data crawlers, which determine the metadata and sense any changes as they happen. This approach is ideal for many low-latency applications. However, these advanced data cataloging solutions raise concerns about resources, compute complexity, and cost.

Complex programs may also pose a security risk. Systems that provide the most opportunity for automated discovery raise the most concerns among operational IT professionals, who are asked either to open their firewall so that a cloud-based solution can gain access or to install a new system on-premises.

If these concerns dissuade an organization from taking the modern approach, there are plenty of off-the-shelf products it can leverage for data cataloging. These may or may not integrate well, depending on the technology stack and legacy systems present within the organization. Either way, organizations must identify where they fit on the spectrum that runs from building a custom solution to using an off-the-shelf product. It all hinges on the nature of the data and the organization’s needs.

Data Visualization Must Tell a Story

Once a data cataloging system has been chosen and implemented, organizations need to figure out how to make the best possible use of that data.

Data visualization technology has advanced significantly in the past decade, producing advanced software such as Tableau, Power BI, Qlik, Looker, and IBM Cognos. Modern tech companies are eager to incorporate data visualization into their practices, yet many struggle to choose a program that best suits their needs. Here are several aspects that organizations should consider before deciding which data visualization tool to adopt.

Size & Source of the Data to be Visualized

The first consideration is the size as well as the source of the data. These qualities affect which software is appropriate and whether two tools must be stitched together to meet the organization’s data visualization needs. For example, suppose a company stores its data in cold storage such as S3 and connects that storage directly to Tableau. Even though Tableau provides that connector, the performance of the visualization task will suffer. Tableau is a remarkable visualization tool, but placing the onus of querying on Tableau hurts performance and latency. In this case, Qlik is a much better fit because its built-in query engine efficiently runs queries on large datasets and cold storage. Again, this is not a critique of Tableau; it simply means the user must adequately assess the strengths and weaknesses of visualization tools and align them with the organization’s goals.

Organization’s Technology Stack

Another factor is the organization’s technology stack, which must be carefully thought through before committing to a data visualization tool. An organization may already be invested in Azure Cloud, the IBM ecosystem, or another technology stack of choice. If a company uses the IBM ecosystem, IBM Cognos is the logical choice; if it uses Azure Cloud, Power BI is the smartest choice. Tools should be mixed and matched only when the organization has no unified strategy built around a one-stop-shop technology stack. That said, most tools are built with connectors that let them work with other tools.

The Extent of Data Pre-Processing Needed

The final factor to be considered is data pre-processing. Ideally, a visualization tool should query the data directly and be able to filter, sort, and aggregate it within the tool. If the pre-processing is complicated, however, it puts an additional onus on the data visualization program, which affects performance. In that case, the pre-processing data engineering work must be handled outside the tool. Many visualization tools have a companion pre-processing tool; for example, Tableau has Tableau Prep. By carefully considering the extent of the required data preparation, the user can predict the performance of the data visualization and the pace at which the data is visualized.
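To illustrate what handling pre-processing outside the tool can look like, here is a minimal sketch that rolls raw transactions up into monthly totals per region before anything reaches the visualization layer. The function name `monthly_totals`, the row layout (ISO date, region, amount), and the sample values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Assumed raw row layout: (ISO date "YYYY-MM-DD", region, amount).
RawRow = Tuple[str, str, float]


def monthly_totals(rows: Iterable[RawRow]) -> List[Tuple[str, str, float]]:
    """Aggregate raw rows into one (month, region, total) row per group."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for date, region, amount in rows:
        month = date[:7]  # "YYYY-MM-DD" -> "YYYY-MM"
        totals[(month, region)] += amount
    # Sort by month, then region, so the output order is stable.
    return [(month, region, total) for (month, region), total in sorted(totals.items())]


# Hypothetical raw transactions.
raw = [
    ("2022-10-03", "East", 100.0),
    ("2022-10-17", "East", 50.0),
    ("2022-10-21", "West", 25.0),
    ("2022-11-02", "East", 75.0),
]

for row in monthly_totals(raw):
    print(row)
```

The visualization tool then loads the small aggregated table and only needs to filter and sort it, rather than scanning every raw transaction each time a chart is rendered.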

Apart from the above considerations, organizations pursuing data visualization initiatives must recognize that choices of color, chart type, and visualization type determine the impact that data visualization will have on their business. The most effective data visualization solutions combine art with science.

Most importantly, strong data visualization software doesn’t just spit out scatterplots, heat maps, pie charts, or bar graphs. It tells a story. Industry leaders rely on these tools because they can carve out narrative arcs without sacrificing the ability to experiment with several approaches. As data visualization technology advances, this storytelling capability will become more and more apparent, helping companies that use visualization tools to efficiently develop data products that are increasingly in line with consumer demand.

With the proliferation of data come potential benefits and serious responsibility for organizations. To be most effective, they need systems to understand what they have, ensure the data is up-to-date and retrievable, and parlay the data into visualizations that help tell a story. Many tools exist that, used wisely, can help organizations achieve all these goals; organizations just need to know which tools to use and how to use them.

Originally published in Spiceworks, November 2022
