A Use Case for In-Memory ETL: Leveraging Apache Spark and DataFrames to Handle Complex Business Rules

Moving your reporting infrastructure to the cloud has many advantages… but how do you get it there? Read our blog to see how we used a tech stack comprised of Apache Spark, Snowflake, and Looker to achieve a 5x improvement in processing performance.

Recently we were tasked with migrating a client’s reporting infrastructure (Oracle and IBM DataStage) from on-prem to the cloud.

Data Warehouse and Analytics Platforms

For the data warehouse platform a core requirement was to scale storage and compute for very sophisticated set analytics and calculations. However the usage concurrency was very lumpy.

We selected Snowflake because of its clustered, shared data architecture that would allow us to scale dynamically and cost-efficiently, and because the client was already on AWS. (More on that in a later blog).

The client selected Looker because of its fast cloud-based deployment model and its integration with Snowflake. (I’ll unpack this and comparisons of Looker vs. other tools in a future blog).

Data Engineering

The legacy ETL was an on-prem implementation of IBM DataStage with heavy use of PL/SQL procedures in Oracle. The implementation was designed for 30% ETL and 70% ELT. With expensive connectors to Salesforce and SAP, DataStage was deemed too expensive to maintain, and the architecture group challenged us to find an open source alternative.

A core requirement for the data engineering stack was to support transforming large amounts of data and calculating metrics at multiple levels over several years of history. In addition, we needed to ingest CRM data in real-time. Finally, we were interested in options on the cloud that could be supported by Snowflake’s extensive set of drivers, connectors, and utilities.

We selected Apache Spark because of its support of big data pipelines and the flexibility in programming complex business logic. Another reason: Snowflake ships with a Spark connector which supports bi-directional data movement between Snowflake and Spark clusters.

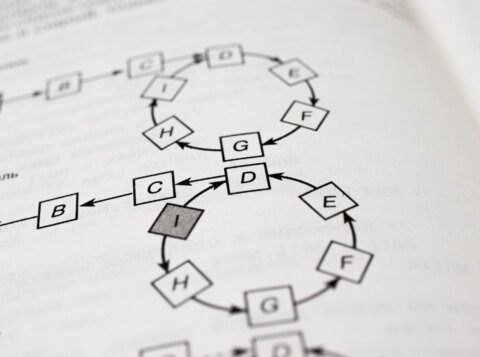

To stay on the AWS system, we planned to host the Spark cluster in AWS EMR (see Fig. 1).

Why Apache Spark for our Use Case?

A foundational component of the analytics depended on metric calculations at a point in time. While Looker can support a number of transformations through its LookML model, we were forced to design a data model with historical snapshots to support the more complex set-based calculations.

This data model required processing of a sets of intermediate results stored in temporary tables. On these temp tables, we had to process additional join operations before loading the final staging result sets. With DataStage and the “ELT” in Oracle, we had noticed a deterioration in job performance as data volumes increased over the past few year.

Our thesis was that using Spark SQL along with its DataFrame API could give us performance improvements. DataFrames are equivalent to tables in relational databases, just loaded in memory (and memory is available for cheap and in large amounts on the cloud!).

We ran a number of pilots loading DataFrames with source data from Salesforce and matching with ERP systems and transforming data in a distributed manner. Since the heavy lifting of the data processing was done in-memory, we found a 5x improvement in job performance with similar amounts of raw source data in production.

Next Steps

We’re building a pipe to inject real-time Salesforce and ERP data into the ”hybrid” data warehouse and expose pipeline increments in Looker. To that end, we’ve set up event-based notification streams in AWS EMR.

We’re also using OCR to read PDF-based contracts, converting to XML, and shredding into non-relational data structures that are more resilient to changes in the XML schema.